Apache NiFi vs. Apache Airflow – Real-Time vs. Batch Data Orchestration

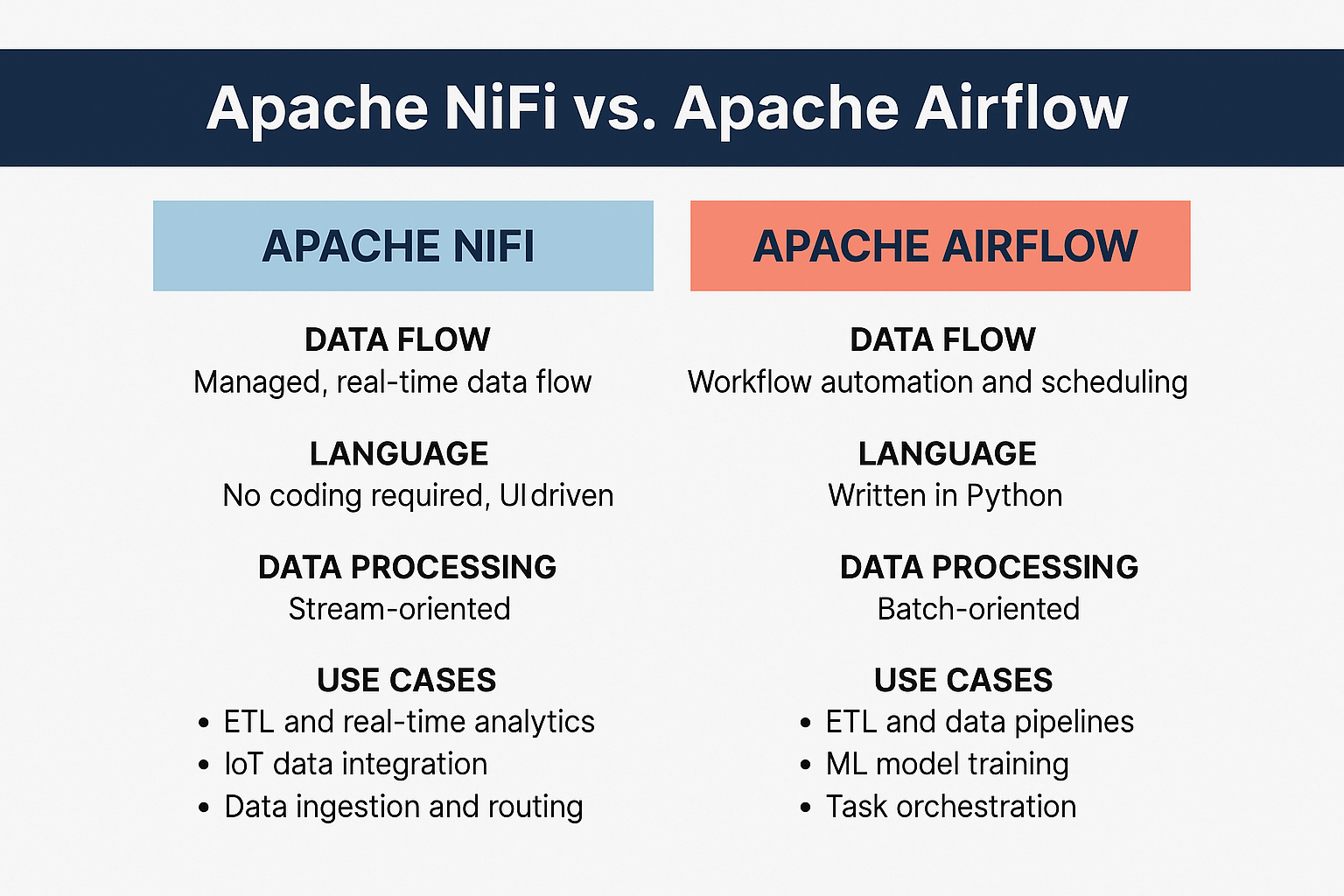

Teams building data pipelines often have to choose between Apache NiFi and Apache Airflow. Both are powerful open-source platforms, but they serve fundamentally different purposes in the data ecosystem: NiFi excels at real-time data ingestion and streaming workflows, while Airflow is geared toward batch processing and complex workflow orchestration.

Understanding the Core Differences

Apache NiFi: The Real-Time Data Flow Engine

Apache NiFi is a dataflow system based on flow-based programming concepts, designed primarily for real-time data ingestion, transformation, and distribution. Originally developed by the U.S. National Security Agency (NSA) and later donated to the Apache Software Foundation, NiFi provides a visual, drag-and-drop interface that allows users to design complex data flows without extensive coding.

Key Characteristics:

- Real-time processing: Continuously processes data as it flows through the system

- Visual interface: Web-based graphical user interface for designing data flows

- Data provenance: Comprehensive tracking of data lineage from source to destination

- Back pressure management: Automatic flow control to prevent system overload

Apache Airflow: The Batch Workflow Orchestrator

Apache Airflow is an open-source platform for developing, scheduling, and monitoring batch-oriented workflows. Built around Directed Acyclic Graphs (DAGs), Airflow excels at orchestrating complex, interdependent tasks that need to run on specific schedules.

Key Characteristics:

- Batch processing: Designed for scheduled, discrete data processing tasks

- Code-based workflows: DAGs defined programmatically using Python

- Advanced scheduling: Sophisticated scheduling capabilities including cron-based and data-aware scheduling

- Extensive integrations: Over 1,500 pre-built operators and hooks for various systems

Architecture and Design Philosophy

NiFi Architecture

NiFi operates within a JVM environment with several core components working together to manage data flows:

- Flow Controller: The central brain managing thread allocation and scheduling

- Web Server: Hosts the HTTP-based command and control API

- FlowFile Repository: Tracks the state of data objects in the system

- Content Repository: Stores the actual data content

- Provenance Repository: Maintains detailed audit trails

NiFi’s architecture supports zero-leader clustering: every node in the cluster runs the same flow on its own share of the data, and Apache ZooKeeper handles coordinator election and automatic failover.
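To make the division of labor between the three repositories more concrete, here is a minimal Python sketch. It is not NiFi code (NiFi itself runs on the JVM); the `FlowFile` and `MiniFlow` classes and their methods are hypothetical stand-ins, and the event names are used only as illustrative labels. The point it tries to show is that metadata, content, and audit history are stored separately, so changing an attribute does not rewrite the payload.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class FlowFile:
    """Metadata record: attributes plus a pointer (claim) to the content."""
    attributes: dict
    content_claim: str
    id: str = field(default_factory=lambda: str(uuid.uuid4()))


class MiniFlow:
    """Toy stand-in for the three repositories; not NiFi's real classes."""

    def __init__(self):
        self.flowfile_repo = {}    # FlowFile id -> FlowFile (state of each object)
        self.content_repo = {}     # claim id -> raw bytes (the actual payload)
        self.provenance_repo = []  # append-only audit trail of events

    def receive(self, data: bytes, source: str) -> FlowFile:
        claim = str(uuid.uuid4())
        self.content_repo[claim] = data
        ff = FlowFile(attributes={"source": source}, content_claim=claim)
        self.flowfile_repo[ff.id] = ff
        self.provenance_repo.append(("RECEIVE", ff.id, source))
        return ff

    def update_attribute(self, ff: FlowFile, key: str, value: str) -> None:
        # Only metadata changes; the stored content is untouched.
        ff.attributes[key] = value
        self.provenance_repo.append(("ATTRIBUTES_MODIFIED", ff.id, key))

    def lineage(self, ff: FlowFile) -> list:
        """Return the ordered event types recorded for one FlowFile."""
        return [event for event, ff_id, _ in self.provenance_repo if ff_id == ff.id]


flow = MiniFlow()
ff = flow.receive(b'{"temp": 21.5}', source="sensor-7")
flow.update_attribute(ff, "mime.type", "application/json")
print(flow.lineage(ff))
```

Calling `lineage` replays the audit trail for a single object, which is the basic idea behind tracing data from source to destination.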

Airflow Architecture

Airflow’s architecture consists of four core components that work together to execute workflows:

- Scheduler: Polls the database for task state changes and manages task lifecycles

- Web Server: Provides the user interface and REST API

- Workers: Execute individual tasks and manage log collection

- Database: Central metadata store that’s critical for all operations

The architecture supports multiple executors including Sequential, Local, Celery, and Kubernetes executors for different scaling needs.
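The interaction between scheduler, workers, and metadata database can be sketched in a few lines of Python. This is a deliberately simplified model, not Airflow's implementation: the single-column `upstream` schema, the state names, and the `scheduler_pass` and `worker_pass` functions are assumptions made for illustration, and an in-memory SQLite database stands in for the metadata store.

```python
import sqlite3

# In-memory database standing in for the central metadata store.
db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE task_instance (task_id TEXT PRIMARY KEY, upstream TEXT, state TEXT)"
)
db.executemany(
    "INSERT INTO task_instance VALUES (?, ?, 'none')",
    [("extract", None), ("transform", "extract"), ("load", "transform")],
)


def scheduler_pass(conn):
    """Queue every unscheduled task whose upstream task (if any) has succeeded."""
    rows = conn.execute(
        """
        SELECT t.task_id FROM task_instance t
        LEFT JOIN task_instance u ON u.task_id = t.upstream
        WHERE t.state = 'none' AND (t.upstream IS NULL OR u.state = 'success')
        """
    ).fetchall()
    for (task_id,) in rows:
        conn.execute(
            "UPDATE task_instance SET state = 'queued' WHERE task_id = ?", (task_id,)
        )
    return [task_id for (task_id,) in rows]


def worker_pass(conn):
    """Pretend to run every queued task and record success in the database."""
    rows = conn.execute(
        "SELECT task_id FROM task_instance WHERE state = 'queued'"
    ).fetchall()
    for (task_id,) in rows:
        conn.execute(
            "UPDATE task_instance SET state = 'success' WHERE task_id = ?", (task_id,)
        )
    return [task_id for (task_id,) in rows]


execution_order = []
while True:
    if not scheduler_pass(db):
        break  # nothing left to schedule
    execution_order.extend(worker_pass(db))
print(execution_order)
```

Because all coordination goes through the database, the scheduler and the workers never call each other directly, which is why the metadata store is critical to every operation.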

Processing Paradigms: Real-Time vs. Batch

Real-Time Data Processing with NiFi

NiFi excels in scenarios requiring continuous data processing with minimal latency. Its streaming-first architecture makes it ideal for:

- IoT data collection: Real-time ingestion from sensors and devices

- Log aggregation: Continuous collection and processing of system logs

- Event streaming: Processing social media feeds and real-time analytics

- Data integration: Moving data between systems as it becomes available

The platform’s ability to handle back pressure automatically prevents system overloads when downstream systems cannot keep up with data flow rates.
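The idea behind back pressure can be illustrated with a small deterministic simulation. This is a sketch of the general technique (a bounded queue between a fast producer and a slow consumer), not NiFi's scheduler; the `Connection` class, the `simulate` function, and the tick-based timing are assumptions made for the example.

```python
from collections import deque


class Connection:
    """Queue between two processors with an object-count threshold."""

    def __init__(self, threshold: int):
        self.queue = deque()
        self.threshold = threshold

    def is_full(self) -> bool:
        return len(self.queue) >= self.threshold


def simulate(ticks: int, produce_per_tick: int, consume_per_tick: int, threshold: int) -> dict:
    conn = Connection(threshold)
    stats = {"produced": 0, "consumed": 0, "deferred": 0, "max_depth": 0}
    for _ in range(ticks):
        # Upstream processor: only emits while the connection has room.
        for _ in range(produce_per_tick):
            if conn.is_full():
                stats["deferred"] += 1  # left at the source, not dropped
            else:
                conn.queue.append(stats["produced"])
                stats["produced"] += 1
        stats["max_depth"] = max(stats["max_depth"], len(conn.queue))
        # Slower downstream processor drains what it can.
        for _ in range(min(consume_per_tick, len(conn.queue))):
            conn.queue.popleft()
            stats["consumed"] += 1
    return stats


stats = simulate(ticks=5, produce_per_tick=3, consume_per_tick=1, threshold=4)
print(stats)
```

Even though the producer is three times faster than the consumer, the queue depth never exceeds the threshold; the excess work is deferred at the source rather than piling up in memory.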

Batch Processing Excellence with Airflow

Airflow’s strength lies in orchestrating complex batch workflows that process large volumes of data at scheduled intervals. Common use cases include:

- ETL pipelines: Extract, transform, and load operations for data warehouses

- Data migration: Moving large datasets between systems

- Machine learning workflows: Training, evaluation, and deployment of ML models

- Reporting and analytics: Scheduled generation of business reports

Airflow’s DAG-based approach ensures tasks execute in the correct order with proper dependency management.
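Dependency-ordered execution can be sketched with Python's standard-library `graphlib` module (Python 3.9+). This is not how Airflow is implemented internally; the task names and the `execution_waves` helper are hypothetical, and the sketch only illustrates what "acyclic" and "correct order" mean in practice.

```python
from graphlib import CycleError, TopologicalSorter

# Each task maps to the set of upstream tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join"},
    "refresh_report": {"load_warehouse"},
}


def execution_waves(deps):
    """Group tasks into waves; tasks in the same wave could run in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = execution_waves(dependencies)
for number, wave in enumerate(waves, start=1):
    print(number, wave)

# A cyclic definition is rejected before anything runs.
try:
    execution_waves({"a": {"b"}, "b": {"a"}})
    cycle_detected = False
except CycleError:
    cycle_detected = True
```

The two extract tasks land in the same wave because neither depends on the other, while every later task waits for its upstream to finish.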

User Experience and Ease of Use

NiFi’s Visual Approach

NiFi’s drag-and-drop interface makes it accessible to users with limited programming experience. The visual canvas allows users to:

- Connect processors with simple drag-and-drop operations

- Configure data flows through intuitive property panels

- Monitor data flow in real-time through the web interface

- Modify flows at runtime without stopping the system

Airflow’s Code-Centric Model

Airflow requires Python programming knowledge to create and maintain DAGs. This approach offers:

- Flexibility: Full programming capabilities for complex logic

- Version control: DAGs can be managed like any other code

- Dynamic workflows: Ability to generate workflows programmatically

- Testing capabilities: Standard software testing practices apply
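As a rough illustration of the last two points, the sketch below generates a workflow definition from a list of table names and then checks its structure with plain assertions. It uses only ordinary Python data structures rather than Airflow's DAG classes; `build_pipeline` and the task naming scheme are hypothetical.

```python
def build_pipeline(tables):
    """Return {task_id: set of upstream task_ids} for a list of source tables."""
    deps = {"publish": set()}
    for table in tables:
        extract, load = f"extract_{table}", f"load_{table}"
        deps[extract] = set()           # extracts have no upstream
        deps[load] = {extract}          # each load waits for its extract
        deps["publish"].add(load)       # publish waits for every load
    return deps


pipeline = build_pipeline(["orders", "users"])

# Structural checks of the kind a unit test could run in CI.
assert pipeline["publish"] == {"load_orders", "load_users"}
assert pipeline["load_orders"] == {"extract_orders"}
assert all(upstream in pipeline for ups in pipeline.values() for upstream in ups)
print(sorted(pipeline))
```

Adding a new source table becomes a one-line change to the input list, and the same checks catch a missing or misnamed dependency before deployment.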

Scalability and Performance

NiFi Scaling Characteristics

NiFi supports both horizontal and vertical scaling:

- Horizontal scaling: Zero-leader clustering for distributed processing

- Vertical scaling: Increasing concurrent tasks per processor

- Edge deployment: MiNiFi for lightweight edge computing scenarios

- Site-to-site communication: Efficient data transfer between NiFi instances

Airflow Scaling Options

Airflow provides multiple execution models for different scaling needs:

- Sequential Executor: Single-threaded execution for development

- Local Executor: Parallel execution using multiple processes on a single machine

- Celery Executor: Distributed execution across multiple workers

- Kubernetes Executor: Container-based execution for cloud-native deployments
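The practical consequence of pluggable executors is that the workflow definition stays the same while the execution strategy changes. The sketch below shows that idea with the standard library only; `run_sequential` and `run_parallel` are hypothetical helpers, and a thread pool is used here purely as a convenient stand-in for a pool of worker processes, remote workers, or pods.

```python
from concurrent.futures import ThreadPoolExecutor


def run_sequential(tasks):
    """Run tasks one at a time, in definition order."""
    return {name: fn() for name, fn in tasks.items()}


def run_parallel(tasks, max_workers=4):
    """Run independent tasks concurrently on a pool of workers."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {name: pool.submit(fn) for name, fn in tasks.items()}
        return {name: future.result() for name, future in futures.items()}


# The same task definitions are handed to either strategy unchanged.
tasks = {f"square_{n}": (lambda n=n: n * n) for n in range(5)}

sequential_results = run_sequential(tasks)
parallel_results = run_parallel(tasks)
print(sequential_results == parallel_results)
```

Both strategies produce the same results; what differs is throughput and where the work physically runs.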

Integration Capabilities

Both platforms offer extensive integration options, but with different focuses:

NiFi Integrations:

- Over 300 built-in processors for various data sources

- Support for HDFS, Kafka, HTTP/HTTPS, FTP, databases

- Custom processors can be developed in Java

Airflow Integrations:

- Over 1,500 pre-built operators, hooks, and sensors

- Extensive cloud platform support (AWS, GCP, Azure)

- Custom operators can be developed in Python

Decision Framework: When to Choose Which

Choose Apache NiFi When:

- Real-time processing is a primary requirement

- Visual workflow design is preferred over coding

- Data provenance and lineage tracking is critical

- Back pressure management is needed for streaming data

- Teams have limited programming experience

Choose Apache Airflow When:

- Batch processing and scheduled workflows are primary needs

- Complex task dependencies need to be managed

- Python programming flexibility is required

- Extensive third-party integrations are needed

- Enterprise-grade workflow orchestration is the goal

Performance and Reliability Comparison

| Aspect | Apache NiFi | Apache Airflow |
| --- | --- | --- |
| Processing Type | Real-time, continuous streaming | Batch, scheduled intervals |
| Latency | Low latency, near real-time | Higher latency, batch intervals |
| Data Freshness | Up-to-date, current data | Data reflects past state |
| Error Handling | Built-in back pressure and configurable error paths | Retry mechanisms and task state monitoring |
| Resource Usage | Continuous resource consumption | Periodic resource spikes during batch windows |
| Fault Tolerance | Guaranteed delivery with write-ahead logs | Task retries, with task state persisted in the metadata database |
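The retry-based error handling in the Airflow column can be sketched as follows. This is a generic retry loop, not Airflow's internal logic; `run_with_retries` and `FlakyTask` are hypothetical names, with `retries` and `retry_delay` chosen to mirror the kind of per-task settings a batch orchestrator exposes.

```python
import time


def run_with_retries(task, retries: int, retry_delay: float = 0.0, sleep=time.sleep):
    """Call task(); on failure, retry up to `retries` more times."""
    attempt = 0
    while True:
        attempt += 1
        try:
            return task(), attempt
        except Exception:
            if attempt > retries:
                raise  # retries exhausted: surface the failure
            sleep(retry_delay)


class FlakyTask:
    """Hypothetical task that fails a fixed number of times, then succeeds."""

    def __init__(self, failures: int):
        self.remaining_failures = failures

    def __call__(self):
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise RuntimeError("transient failure")
        return "ok"


result, attempts = run_with_retries(FlakyTask(failures=2), retries=3)
print(result, attempts)
```

A task that keeps failing after its retries are used up raises the original error, which is the point at which an orchestrator would mark the task as failed and stop its downstream tasks.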

Conclusion

The choice between Apache NiFi and Apache Airflow ultimately depends on your organization’s specific data processing requirements. NiFi excels in real-time data ingestion scenarios where continuous processing, visual workflow design, and built-in data provenance are paramount. Airflow dominates in batch processing environments requiring complex workflow orchestration, advanced scheduling, and extensive third-party integrations.

Many organizations find value in using the two tools together: NiFi handles real-time data ingestion and initial processing, and Airflow orchestrates the downstream batch analytics and reporting workflows. This hybrid approach plays to the strengths of each platform while covering the full spectrum of data orchestration needs in modern data architectures.