Introduction

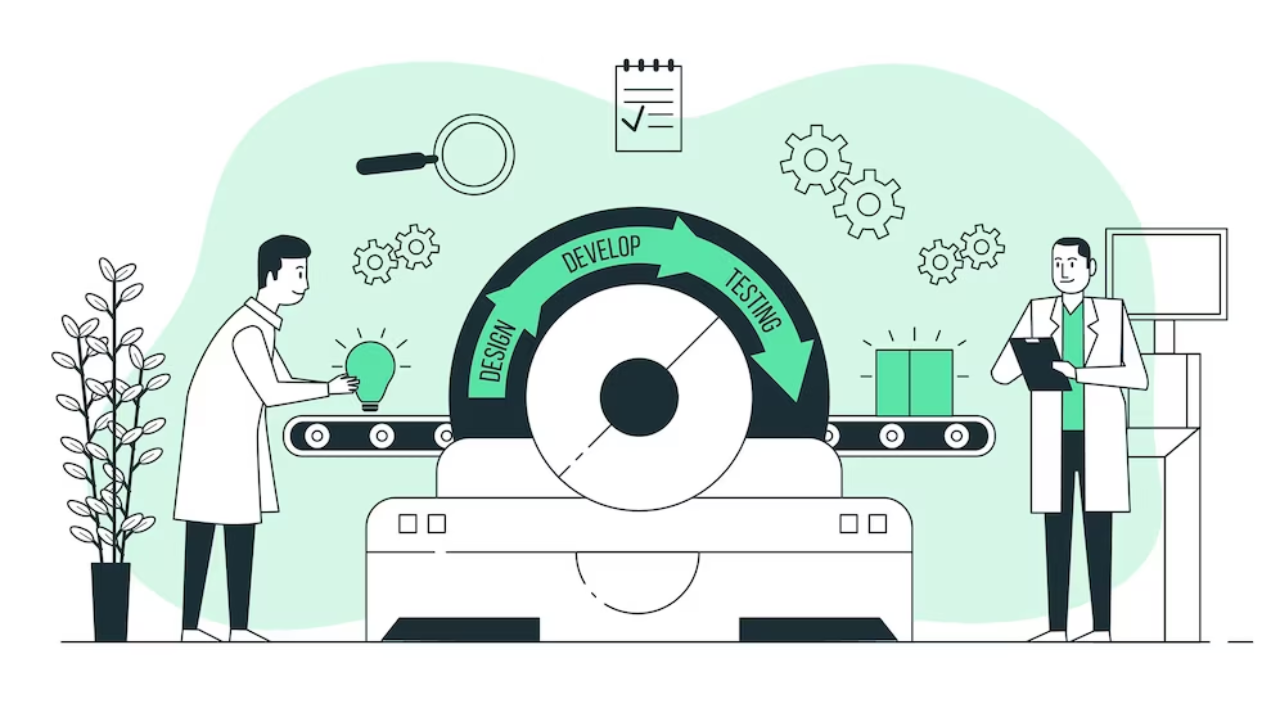

Machine Learning Operations, commonly known as ML Ops, is a set of practices and tools that facilitate the seamless integration of data science and machine learning models into production systems. It is an emerging field that aims to streamline the deployment, management, and scaling of machine learning models. In this blog post, we will explore the concept of ML Ops, its significance in the data science workflow, its components, best practices, challenges, and its role in shaping the future of data-driven applications.

The Need for ML Ops

Machine Learning has revolutionized the way businesses leverage data to gain valuable insights, make informed decisions, and automate complex processes. ML models are now essential components of various applications, ranging from recommendation systems and fraud detection to natural language processing and autonomous vehicles. However, the journey from developing a successful ML model to deploying and managing it in a production environment is not without its challenges. The increasing adoption of machine learning models has led to a gap between data science experimentation and actual production deployment. ML Ops addresses this gap by providing a systematic approach to manage models throughout their lifecycle, from development to production, monitoring, and updates.

Let’s explore the key reasons that underscore the need for ML Ops.

1. Seamless Transition from Development to Production: In the early stages of ML development, data scientists typically work in isolated environments with small datasets and limited computing resources. While this environment is conducive to experimentation and model refinement, it often fails to capture the complexities and constraints of real-world production systems. ML Ops aims to make the transition from the data scientist’s development environment to the production environment smooth and predictable, reducing surprises and bottlenecks during deployment.

2. Version Control and Reproducibility: As ML models evolve, it becomes crucial to track changes, iterations, and improvements made to the model and its associated data. Without proper version control, it becomes challenging to reproduce and debug issues that may arise during deployment. ML Ops facilitates version control for models, code, and data, ensuring that all changes are tracked, well-documented, and easily accessible.

3. Ensuring Model Reliability and Consistency: In production, ML models are exposed to real-world data, which might differ from the data used during development. As a result, models may experience performance degradation or fail altogether due to data drift (a shift in the distribution of the input data) or concept drift (a change in the relationship between the inputs and the target). ML Ops incorporates monitoring and feedback loops to detect and address such issues promptly, ensuring that models remain reliable and consistent over time.

4. Efficient Model Deployment and Updates: Manually deploying and updating ML models can be a time-consuming and error-prone process. ML Ops leverages automation and continuous deployment techniques to streamline the deployment process, reducing the time to production and minimizing the risk of errors during updates.

5. Scalability and Resource Management: Scalability is a key consideration when deploying ML models in production, as applications often experience varying workloads and demands. ML Ops helps optimize resource usage, ensuring that models can handle increased traffic and scale appropriately. This helps avoid both overprovisioning, which wastes resources, and underprovisioning, which degrades performance under load, resulting in cost-effective and efficient deployments.

6. Model Monitoring and Performance Management: Once a model is deployed, its performance must be continuously monitored to identify anomalies or degradation in performance. ML Ops provides mechanisms to track model performance in real-time, allowing for proactive maintenance and updates to ensure optimal performance.

7. Collaboration between Data Science and Operations Teams: ML models are typically developed by data scientists, while their deployment and maintenance often fall to DevOps and IT operations teams. ML Ops facilitates collaboration between these teams, breaking down silos and fostering effective communication to achieve shared goals.

8. Security and Privacy Compliance: Machine learning models often handle sensitive data, making security and privacy compliance crucial. ML Ops professionals work with security teams to implement robust security measures, encryption techniques, and data access controls to protect sensitive information and ensure regulatory compliance.

Key Components of ML Ops

ML Ops is a multi-faceted discipline that brings together aspects of DevOps and data science to enable efficient and reliable ML model workflows. This section covers its essential building blocks, from version control and CI/CD to model monitoring and automated retraining. The key components of ML Ops include:

- Version Control: Just like in software development, version control is essential for ML Ops. It helps track changes made to ML code, data, and model configurations, making it easier to collaborate, reproduce results, and roll back to previous versions if necessary.

- Continuous Integration and Continuous Deployment (CI/CD): CI/CD pipelines automate the process of building, testing, and deploying ML models. This ensures that changes are thoroughly tested and quickly deployed to production, reducing the time between model iterations.

- Data Management: Effective data management is crucial for ML Ops. It involves organizing, storing, and versioning datasets in a way that allows reproducibility and data lineage tracking. Proper data management ensures that models are trained on consistent and accurate data.

- Model Registry: A model registry is a centralized repository that stores trained models along with their metadata, such as version information, performance metrics, and dependencies. It facilitates tracking, sharing, and deployment of models across teams and environments.

- Model Monitoring and Drift Detection: Once deployed, ML models need continuous monitoring to ensure they are performing as expected. ML Ops includes mechanisms to track model performance and detect data drift or concept drift, helping to identify when models need retraining or updating.

- Infrastructure Management: ML Ops addresses infrastructure requirements for ML models. It involves provisioning and managing resources like compute, storage, and networking needed for model training, testing, and deployment.

- Dependency Management: Managing dependencies is critical in ML Ops to ensure that the environments for model development, testing, and deployment are consistent and reproducible. Techniques such as containerization (for example, with Docker) and virtual environments with pinned package versions help achieve this.

- Model Testing and Validation: ML Ops emphasizes thorough testing and validation of ML models before deploying them to production. This includes unit tests, integration tests, and validation against real-world data.

- Collaboration and Communication: Effective collaboration and communication between data scientists, ML engineers, and operations teams are essential for successful ML Ops implementation. Shared knowledge and clear communication channels facilitate smoother workflows.

- Security and Governance: ML Ops considers security and governance aspects, such as access controls, data privacy, and compliance requirements. It ensures that ML models are developed and deployed in a secure and compliant manner.

- Automated Model Retraining: ML Ops incorporates mechanisms to automatically trigger model retraining when new data becomes available or when model performance drops below a certain threshold. This helps to keep models up-to-date and accurate over time.

- Error Tracking and Logging: ML Ops implements robust error tracking and logging to detect and diagnose issues in production ML systems. This allows teams to respond quickly to problems and improve the overall reliability of the ML infrastructure.
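The data-management and version-control components above depend on being able to say exactly which data a model was trained on. A minimal sketch, assuming datasets are plain files on local disk, is to record a content hash for each file and commit that manifest alongside the training code. The function names and manifest layout are illustrative rather than part of any particular tool; dedicated tools such as DVC build on the same content-hashing idea and add remote storage.

```python
from __future__ import annotations

import hashlib
import json
import tempfile
from pathlib import Path


def fingerprint_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(paths: list[Path]) -> dict[str, str]:
    """Map each dataset file name to its content hash, iterating paths in sorted order."""
    return {p.name: fingerprint_file(p) for p in sorted(paths)}


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp) / "train.csv"
        data.write_text("id,label\n1,0\n2,1\n")
        # Committing this JSON next to the training code pins the exact data version used.
        print(json.dumps(build_manifest([data]), indent=2))
```

Because the hash depends only on file contents, any edit to the data produces a new fingerprint, which is what makes a training run traceable back to its inputs.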

By adopting these key components, organizations can establish a well-structured ML Ops framework to accelerate the development and deployment of machine learning models while maintaining reliability and reproducibility.

CI/CD for Machine Learning

Continuous Integration and Continuous Deployment (CI/CD) are critical practices in software development, and they play a crucial role in ML Ops as well. CI/CD for Machine Learning is a set of practices and tools that automate building, testing, and deploying ML models. It aims to enable faster and more reliable model development and deployment by automating repetitive tasks and enforcing consistent workflows. Here’s an overview of CI/CD for Machine Learning:

- Version Control: Like traditional software development, version control is the foundation of CI/CD for ML. It involves using tools like Git to track changes to ML code, data, and model configurations. Version control allows teams to collaborate, review changes, and roll back to previous states if needed.

- Automated Builds: CI/CD pipelines automate the process of building ML models. This includes installing dependencies, setting up the environment, and preparing data for training.

- Automated Testing: Testing is a critical aspect of CI/CD for ML. Different types of tests are performed to ensure model quality, such as unit tests for individual components, integration tests for the entire pipeline, and performance tests to evaluate model accuracy and efficiency.

- Continuous Integration: In CI/CD for ML, developers regularly integrate their code into a shared repository. Continuous integration ensures that changes made by multiple team members are combined and tested frequently. It helps identify and address integration issues early on.

- Continuous Deployment: Continuous deployment involves automatically deploying the ML model to production or staging environments after successful testing. This automation reduces the risk of manual errors during deployment and accelerates the time to market for ML models.

- Environment Reproducibility: CI/CD for ML emphasizes creating reproducible environments. This is often achieved using containerization technologies like Docker, allowing models to be packaged with all their dependencies, making deployments consistent across different environments.

- Artifact Management: The artifacts produced during the CI/CD pipeline, such as trained models, evaluation metrics, and logs, are stored in an artifact repository. This makes it easy to track and share different versions of the model.

- Model Monitoring: In CI/CD for ML, monitoring is not limited to the deployment phase. Models are continuously monitored in production to detect performance degradation, data drift, or other issues that might require retraining or updates.

- Automated Retraining: When data drift is detected or new data becomes available, CI/CD for ML can automatically trigger retraining of the model and redeploy it to production, ensuring that the model stays up to date.

- Feedback Loop: CI/CD for ML emphasizes a feedback loop between development and operations teams. Insights from production monitoring and user feedback are fed back into the development process, driving continuous improvement.
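The automated-testing and continuous-deployment steps above usually include a gate that blocks a candidate model whose evaluation metrics regress against the model currently in production. The sketch below assumes metrics arrive as plain dictionaries, that every metric is "higher is better", and uses an arbitrary absolute tolerance of 0.01; the function and class names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class GateResult:
    passed: bool
    failures: list[str]


def quality_gate(candidate: dict[str, float], baseline: dict[str, float],
                 max_regression: float = 0.01) -> GateResult:
    """Fail if any baseline metric is missing or drops by more than `max_regression` (absolute).

    Assumes every metric is "higher is better", e.g. accuracy, F1, or AUC-ROC.
    """
    failures = []
    for name, base_value in baseline.items():
        if name not in candidate:
            failures.append(f"{name}: missing from candidate metrics")
        elif candidate[name] < base_value - max_regression:
            failures.append(
                f"{name}: {candidate[name]:.4f} is more than {max_regression} below baseline {base_value:.4f}"
            )
    return GateResult(passed=not failures, failures=failures)


if __name__ == "__main__":
    # Hypothetical numbers; a pipeline would read them from the evaluation step and the model registry.
    result = quality_gate({"accuracy": 0.91, "f1": 0.86}, {"accuracy": 0.90, "f1": 0.88})
    print("PASS" if result.passed else "FAIL")
    for failure in result.failures:
        print("  -", failure)
```

In a CI job, the script would typically exit with a non-zero status when `passed` is false, which most CI systems treat as a failed step and use to stop the deployment stage.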

By adopting CI/CD practices for Machine Learning, organizations can achieve faster development cycles, reduce manual errors, and deliver more reliable and up-to-date ML models to end-users. It fosters collaboration, automation, and continuous improvement, aligning ML development with modern software engineering best practices.

Model Versioning and Management

Model versioning and management are essential aspects of Machine Learning Operations (ML Ops) and are crucial for maintaining control and traceability over the successive iterations of ML models. Proper versioning lets teams track changes, reproduce results, collaborate effectively, and deploy and monitor specific models in production. We now discuss the main approaches and best practices for model versioning and management.

- Version Control System (VCS): A version control system, such as Git, is the foundation of model versioning. VCS allows data scientists and ML engineers to track changes made to ML code, data preprocessing steps, hyperparameters, and other model-related files. It enables collaboration, rollback to previous versions, and documentation of the model development history.

- Model Artifacts: Model artifacts include all the files necessary to define and deploy an ML model successfully. These artifacts may include the model architecture, weights, hyperparameters, data preprocessing scripts, and any other relevant configuration files.

- Model Registry: A model registry is a centralized repository that stores trained models along with their metadata and related artifacts. It acts as a catalog of all the versions of models developed, allowing teams to access, compare, and deploy specific versions as needed.

- Metadata Management: Alongside the model artifacts, metadata plays a crucial role in model versioning and management. Metadata can include information such as the date of training, data used, performance metrics, and the name of the data scientist or ML engineer responsible for the model’s development.

- Semantic Versioning: Semantic versioning assigns version numbers to models in a structured way, typically in the format MAJOR.MINOR.PATCH. Changes to the model architecture or training data may increment the MAJOR version, hyperparameter changes might increment the MINOR version, and bug fixes might increment the PATCH version.

- Model Deployment Management: ML Ops involves deploying models to production, and versioning plays a critical role in managing different versions of models in a production environment. It ensures that the right model version is deployed and can be rolled back if necessary.

- Automated Model Deployment: With proper versioning and management, it becomes easier to automate model deployment processes. CI/CD pipelines can use model versioning information to deploy the latest stable model version to production environments.

- Model Monitoring and Feedback Loop: Model versioning also extends to monitoring the performance of deployed models in production. Feedback from monitoring helps teams identify when a model version needs updating or retraining.

- Collaboration and Sharing: Model versioning promotes collaboration within data science and ML teams. Team members can easily share models, track changes, and provide feedback during the model development lifecycle.

- Model Governance: Model versioning is a critical component of model governance and compliance. It allows organizations to maintain an audit trail of model changes and ensure that specific versions were used for specific tasks.
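To make the registry, metadata, semantic-versioning, and rollback ideas above concrete, here is a toy in-memory registry. It is a sketch, not a real registry API: the class, its methods, and the change labels (`architecture`, `training_data`, `hyperparameters`, `bugfix`) are hypothetical, and the bump rules follow the MAJOR.MINOR.PATCH convention described in this list. Production teams would typically use a dedicated tool (for example, the MLflow Model Registry) backed by persistent storage.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


def _semver_key(version: str) -> tuple[int, int, int]:
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def bump_version(version: str, change: str | None) -> str:
    """Architecture or data change -> MAJOR, hyperparameters -> MINOR, bug fix -> PATCH."""
    major, minor, patch = _semver_key(version)
    if change in ("architecture", "training_data"):
        return f"{major + 1}.0.0"
    if change == "hyperparameters":
        return f"{major}.{minor + 1}.0"
    if change == "bugfix":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")


@dataclass
class ModelVersion:
    version: str
    artifact_uri: str           # where the serialized model lives (hypothetical path scheme)
    metrics: dict[str, float]   # evaluation metrics recorded at registration time
    trained_by: str
    created_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


class ModelRegistry:
    """Toy in-memory registry: versioned models plus a history of production promotions."""

    def __init__(self) -> None:
        self._versions: dict[str, ModelVersion] = {}
        self._production_history: list[str] = []  # oldest first

    def register(self, artifact_uri: str, metrics: dict[str, float], trained_by: str,
                 change: str | None = None) -> ModelVersion:
        latest = max(self._versions, key=_semver_key, default=None)
        version = "1.0.0" if latest is None else bump_version(latest, change)
        entry = ModelVersion(version, artifact_uri, metrics, trained_by)
        self._versions[version] = entry
        return entry

    def promote(self, version: str) -> None:
        if version not in self._versions:
            raise KeyError(f"unknown model version: {version}")
        self._production_history.append(version)

    @property
    def production(self) -> str | None:
        return self._production_history[-1] if self._production_history else None

    def rollback(self) -> str:
        """Revert production to the previously promoted version and return it."""
        if len(self._production_history) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        self._production_history.pop()
        return self._production_history[-1]
```

Keeping the promotion history separate from the catalog of versions is what makes rollback a cheap pointer change rather than a redeployment from scratch.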

By implementing effective model versioning and management practices, organizations can efficiently handle the complexity of ML model development, deployment, and maintenance, ultimately leading to more robust and reliable ML solutions.

Model Monitoring and Performance Tracking

Model monitoring and performance tracking are crucial aspects of Machine Learning Operations (ML Ops) that ensure the deployed ML models continue to perform well in real-world production environments. Monitoring allows teams to detect issues, measure model performance, and make informed decisions about model maintenance and updates. Here we will examine various monitoring techniques and tools used to track model performance, detect anomalies, and trigger model updates when necessary.

- Real-time Monitoring: Model monitoring involves continuously collecting real-time data from the deployed ML model’s inputs and outputs. This data includes predictions, inference times, and any relevant feedback from users or downstream systems.

- Performance Metrics: Defining relevant performance metrics is essential for tracking model effectiveness. These metrics can vary depending on the specific use case but may include accuracy, precision, recall, F1-score, area under the receiver operating characteristic curve (AUC-ROC), or mean average precision (mAP) for object detection models.

- Data Drift Detection: Data drift occurs when the distribution of incoming data changes over time, affecting model performance. Monitoring for data drift helps detect shifts in the input data and can trigger alerts or retraining pipelines when drift is detected.

- Concept Drift Detection: Concept drift refers to situations where the underlying relationship between input features and the target variable changes over time. Concept drift monitoring ensures the model is still valid and adapts to new patterns in the data.

- Error Analysis: Detailed error analysis helps understand model weaknesses and areas for improvement. It involves examining misclassified samples, false positives/negatives, and the patterns or features that contribute to model errors.

- Alerting and Anomaly Detection: Automatic alerts are set up to notify teams when model performance drops below predefined thresholds or when anomalies in predictions occur. This allows teams to respond quickly to performance degradation.

- Logging and Visualization: Logging predictions, performance metrics, and other relevant information in a structured format facilitates visualization and analysis. Visualization tools can help data scientists and stakeholders understand model behavior better.

- Retraining Triggers: Based on predefined rules or performance degradation, model monitoring can trigger automated retraining pipelines. This ensures that models stay up-to-date and effective as the underlying data changes.

- Feedback Loop: Insights from model monitoring are fed back into the model development process. This feedback loop helps data scientists improve model performance and address potential issues discovered during production.

- A/B Testing: A/B testing, or experimentation, is sometimes used to compare the performance of different versions of a model in production. It helps in identifying the best-performing model and validating new model changes.

- User Feedback Integration: Integrating user feedback into model monitoring provides additional context and validation of the model’s performance. User feedback can help identify areas where the model needs improvement or where it excels.
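One common way to implement the data-drift detection and retraining triggers described above is the Population Stability Index (PSI), which compares the binned distribution of a feature in production against a reference sample such as the training data. The sketch below uses NumPy, places bin edges at quantiles of the reference sample, and maps the score to an action using a widely quoted rule of thumb (below 0.1 stable, 0.1 to 0.25 moderate shift, above 0.25 significant shift). Those thresholds and the `drift_action` labels are conventions to tune per use case, not a standard.

```python
import numpy as np


def population_stability_index(reference, production, bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum over bins of (p_prod - p_ref) * ln(p_prod / p_ref)."""
    reference = np.asarray(reference, dtype=float)
    production = np.asarray(production, dtype=float)
    # Interior cut points at reference quantiles, so each bin holds roughly equal reference mass.
    cuts = np.quantile(reference, np.linspace(0.0, 1.0, bins + 1)[1:-1])
    # searchsorted maps every value, including out-of-range ones, to a bin index in [0, bins - 1].
    ref_idx = np.searchsorted(cuts, reference, side="right")
    prod_idx = np.searchsorted(cuts, production, side="right")
    ref_frac = np.bincount(ref_idx, minlength=bins) / reference.size
    prod_frac = np.bincount(prod_idx, minlength=bins) / production.size
    # Clip empty bins to avoid division by zero and log(0).
    ref_frac = np.clip(ref_frac, eps, None)
    prod_frac = np.clip(prod_frac, eps, None)
    return float(np.sum((prod_frac - ref_frac) * np.log(prod_frac / ref_frac)))


def drift_action(psi: float, alert_at: float = 0.1, retrain_at: float = 0.25) -> str:
    """Translate a PSI score into a monitoring action (thresholds are a rule of thumb)."""
    if psi >= retrain_at:
        return "retrain"
    if psi >= alert_at:
        return "alert"
    return "ok"


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    train = rng.normal(0.0, 1.0, 10_000)
    print(drift_action(population_stability_index(train, rng.normal(0.0, 1.0, 10_000))))
    print(drift_action(population_stability_index(train, rng.normal(1.0, 1.0, 10_000))))
```

In a real pipeline this check would run per feature on a schedule, with "alert" routed to the team and "retrain" used to kick off the retraining pipeline.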

By establishing a robust model monitoring and performance tracking system, organizations can ensure that their ML models maintain high performance, adapt to changes in data and user behavior, and deliver valuable insights and predictions in real-world scenarios.
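The threshold-based alerting described in this section can also be driven by ground-truth labels that arrive after the prediction, rather than by input statistics. A minimal sketch, assuming predictions can eventually be joined with their true labels, keeps a sliding window of recent outcomes and flags when accuracy falls below a threshold. The class name, window size, threshold, and minimum-sample count are hypothetical placeholder values.

```python
from __future__ import annotations

from collections import deque


class RollingAccuracyMonitor:
    """Accuracy over the most recent `window` labelled predictions, with a threshold alert."""

    def __init__(self, window: int = 500, threshold: float = 0.9, min_samples: int = 100) -> None:
        self._outcomes: deque[bool] = deque(maxlen=window)  # True = prediction was correct
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, prediction, label) -> None:
        self._outcomes.append(prediction == label)

    @property
    def accuracy(self) -> float | None:
        if not self._outcomes:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def should_alert(self) -> bool:
        # Stay quiet until enough labelled feedback has arrived to make the estimate meaningful.
        if len(self._outcomes) < self.min_samples:
            return False
        return self.accuracy < self.threshold
```

In practice `should_alert()` would be checked periodically and wired to whatever paging or retraining mechanism the team already uses.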

Scalability and Resource Management

Scalability and resource management are critical considerations in Machine Learning Operations (ML Ops) to ensure that ML systems can handle growing workloads and efficiently utilize available resources. As machine learning applications scale, managing computational resources becomes increasingly complex. Scalability refers to the ability of an ML system to handle increased demand, both in terms of data volume and computational requirements, without sacrificing performance. Resource management involves optimizing the allocation and utilization of computational resources to achieve efficient and cost-effective ML model training and deployment. ML Ops addresses these challenges by optimizing resource utilization, load balancing, and automating scalability. Here we will discuss strategies to manage resources effectively.

- Horizontal and Vertical Scaling: Horizontal scaling involves adding more compute nodes or instances to distribute the workload across multiple machines. Vertical scaling, on the other hand, involves increasing the computational power of individual machines. ML Ops should facilitate both forms of scaling to accommodate growing data and model complexity.

- Distributed Computing: Distributed computing frameworks like Apache Spark or TensorFlow’s distributed training capabilities allow ML tasks to be divided across multiple nodes, enabling parallel processing and faster training times.

- Auto-scaling: Auto-scaling is the automatic adjustment of resources based on demand. ML Ops can leverage auto-scaling to automatically increase or decrease the number of compute instances based on factors like data volume or real-time prediction requests.

- Resource Allocation and Containerization: Efficient resource management involves allocating the right amount of memory, CPU, and GPU resources to ML tasks. Containerization technologies like Docker, combined with orchestrators like Kubernetes (which lets each container declare resource requests and limits), provide isolation and resource control, ensuring efficient use of resources across multiple ML models and services.

- Provisioning and Orchestration: ML Ops should include mechanisms for provisioning and orchestrating resources, ensuring that the necessary infrastructure is available when needed and released when no longer required.

- Model Compression and Optimization: To improve scalability, ML models can be compressed and optimized without significantly sacrificing performance. Techniques like quantization, pruning, and knowledge distillation reduce model size and computational requirements.

- Cloud Services: Cloud platforms like AWS, Google Cloud, and Azure provide scalable and on-demand resources for ML workloads. Using cloud services, organizations can easily scale their ML infrastructure up or down based on workload demands.

- Monitoring and Performance Analysis: Monitoring resource usage and performance metrics is essential for identifying bottlenecks, underutilized resources, and areas for optimization. Monitoring helps in making informed decisions regarding resource allocation and scaling strategies.

- Cost Optimization: Resource management should consider cost optimization, where resources are allocated efficiently to avoid unnecessary expenses. This may involve turning off resources during idle periods or choosing cost-effective instance types for different tasks.

- Predictive Scaling: Advanced ML Ops implementations can use predictive analytics to forecast future demand and proactively scale resources before the demand spike occurs.
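The auto-scaling idea above is usually implemented as a proportional control rule. The sketch below mirrors the formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with a tolerance band (the HPA's default is 10%) so small fluctuations do not trigger scaling. The metric itself (for example, requests per second per replica) and the replica limits are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20, tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes Horizontal Pod Autoscaler."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: do not scale, avoids flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds so a metric spike cannot exhaust the budget.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas each averaging 150 requests/s against a target of 100 would scale to ceil(4 * 1.5) = 6 replicas. Predictive scaling would feed a forecast of the metric into the same rule instead of its current value.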

By implementing effective scalability and resource management practices, organizations can ensure that their ML systems handle increasing workloads efficiently and deliver high-performance predictions, meeting growing demand while keeping costs under control and maintaining reliability.
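As a concrete illustration of the quantization technique mentioned under Model Compression and Optimization, the sketch below applies symmetric, per-tensor int8 quantization to a single weight matrix with NumPy. Each float is stored as an 8-bit integer plus one shared scale factor, which cuts storage for float32 weights by a factor of four at the cost of a bounded rounding error. Real toolchains (for example, PyTorch or TensorFlow Lite) add per-channel scales, calibration, and integer kernels; this shows only the core arithmetic.

```python
from __future__ import annotations

import numpy as np


def quantize_int8(weights: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor quantization: weights are approximated by scale * q, q in [-127, 127]."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # guard against an all-zero tensor
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover approximate float32 weights from the int8 values and the shared scale."""
    return q.astype(np.float32) * np.float32(scale)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.normal(0.0, 0.5, size=(256, 256)).astype(np.float32)
    q, scale = quantize_int8(w)
    err = np.max(np.abs(dequantize(q, scale) - w))
    print(f"{w.nbytes} bytes -> {q.nbytes} bytes, max abs error {err:.5f} (bound {scale / 2:.5f})")
```

Because rounding moves each value by at most half a quantization step, the reconstruction error per weight is bounded by `scale / 2`.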

Security and Privacy Considerations

Security and privacy considerations are of paramount importance in Machine Learning Operations (ML Ops), especially when dealing with sensitive data and deploying ML models in production environments. Addressing security and privacy concerns ensures the protection of data, models, and the overall ML infrastructure. Here are some key considerations:

- Data Security: Protecting data at all stages of the ML lifecycle is crucial. This includes encrypting data both in transit and at rest, implementing access controls, and using secure data storage solutions. Data masking and anonymization techniques can also be used to preserve privacy.

- Model Security: ML models can be valuable assets, and protecting them is essential. Secure version control and access controls on the model registry help prevent unauthorized access or tampering with the models.

- Secure Communication: Ensure that all communication between components of the ML system, such as between data sources and model servers, is encrypted in transit using TLS (for example, HTTPS for web APIs).

- Secure APIs: If ML models are exposed through APIs, secure authentication and authorization mechanisms should be implemented to ensure only authorized users or applications can access the API.

- Vulnerability Management: Regularly update and patch ML infrastructure components, libraries, and dependencies to address known security vulnerabilities.

- Access Controls: Implement fine-grained access controls to limit access to data, models, and resources based on roles and responsibilities within the organization.

- Audit Logging: Maintain comprehensive audit logs to track and monitor access to data and models. These logs can be instrumental in identifying and investigating potential security incidents.

- Secure Model Deployment: Use secure deployment practices, such as container security, to ensure that ML models are not exposed to vulnerabilities during deployment.

- Data Privacy Compliance: Ensure compliance with relevant data privacy regulations, such as GDPR or CCPA, when handling personally identifiable information (PII) or sensitive data.

- Secure Collaboration: Encourage secure collaboration among team members. Utilize secure communication channels for sharing sensitive information and restrict access to confidential documents.

- User Authentication and Authorization: Implement strong authentication mechanisms for users accessing the ML system. Multi-factor authentication (MFA) can add an extra layer of security.

- Security Testing: Conduct regular security assessments, penetration testing, and code reviews to identify and address potential security weaknesses in the ML system.

- Third-party Libraries: Be cautious when using third-party ML libraries and frameworks. Vet their provenance, maintenance status, and known vulnerabilities, and maintain a list of approved libraries to minimize potential risks.

- Data Retention Policies: Establish data retention policies to ensure that data is retained only for as long as necessary and is securely disposed of when no longer needed.
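For the data masking and anonymization point under Data Security, one widely used building block is keyed pseudonymization: replacing an identifier with an HMAC of it, so records can still be joined on the pseudonym while the raw value is not exposed to training jobs. This is a sketch under assumptions: the field names are hypothetical, and the key would come from a secrets manager rather than being generated inline. Note that pseudonymized data generally still counts as personal data under regulations such as GDPR, because anyone holding the key can recompute pseudonyms for known identifiers and re-link records.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets


def pseudonymize(value: str, key: bytes) -> str:
    """Deterministic keyed pseudonym (HMAC-SHA256, hex): same value and key give the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record: dict, pii_fields: set[str], key: bytes) -> dict:
    """Return a copy of `record` with the listed PII fields replaced by pseudonyms."""
    return {name: pseudonymize(str(value), key) if name in pii_fields else value
            for name, value in record.items()}


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # stand-in: load from a secrets manager in practice
    row = {"email": "jane@example.com", "country": "DE", "amount": 42.0}
    print(mask_record(row, {"email"}, key))
```

Using a keyed HMAC rather than a plain hash matters: an unkeyed hash of an email address can be reversed by hashing a list of candidate addresses.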

By implementing robust security and privacy measures, organizations can safeguard their data, models, and ML infrastructure from potential threats and ensure compliance with data protection regulations. Security and privacy considerations should be integrated into every aspect of ML Ops, from data acquisition to model deployment and beyond.
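The Secure APIs and authentication points above can be illustrated with a minimal API-key check. The sketch assumes keys are long random tokens issued by the service, stores only their SHA-256 digests, and compares digests with `hmac.compare_digest`, which the Python documentation describes as designed to avoid content-based short-circuiting that enables timing attacks. A fast hash is acceptable here only because the keys are high-entropy random strings; human-chosen passwords need a deliberately slow password hash instead. All function names are hypothetical.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets


def hash_key(api_key: str) -> str:
    """Digest stored server-side; the plaintext key is shown to the client once and never stored."""
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()


def issue_key() -> tuple[str, str]:
    """Create a new random key; return (plaintext for the client, digest for storage)."""
    api_key = secrets.token_urlsafe(32)
    return api_key, hash_key(api_key)


def is_authorized(presented_key: str | None, stored_digests: set[str]) -> bool:
    """Check a presented key (for example, from a request header) against the stored digests."""
    if not presented_key:
        return False
    candidate = hash_key(presented_key)
    # compare_digest avoids content-based short-circuiting when comparing the digests.
    return any(hmac.compare_digest(candidate, stored) for stored in stored_digests)
```

Storing only digests means a leaked key table does not directly expose usable credentials, and each check could also be written to the audit log described in the list above.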

Collaboration between Data Science and DevOps Teams

Collaboration between Data Science and DevOps teams is essential to successful Machine Learning Operations (ML Ops) and the smooth deployment and maintenance of machine learning models. Effective collaboration ensures that both teams work together seamlessly, leveraging their respective expertise to deliver high-quality ML solutions. Here are some key aspects of fostering collaboration between Data Science and DevOps teams:

- Clear Communication Channels: Establish clear and open communication channels between the Data Science and DevOps teams. Regular meetings, stand-ups, and collaboration tools can facilitate effective communication.

- Shared Goals and Objectives: Align the goals and objectives of both teams to ensure they are working towards common outcomes. This helps in avoiding conflicts and promoting a sense of shared responsibility.

- Collaborative Planning: Include both Data Science and DevOps teams in the planning process for ML projects. Collaboration from the early stages ensures that deployment and infrastructure considerations are addressed from the beginning.

- Cross-Team Training and Knowledge Sharing: Encourage cross-team training and knowledge sharing sessions. This helps in developing a better understanding of each team’s processes, tools, and requirements.

- Version Control and GitOps: Utilize version control systems like Git and adopt GitOps practices to manage changes in ML code, model configurations, and infrastructure as code. This ensures transparency and traceability in the development process.

- Continuous Integration and Continuous Deployment (CI/CD): Implement CI/CD pipelines that automate the integration, testing, and deployment of ML models. Both teams should work together to design and maintain these pipelines.

- Infrastructure as Code (IaC): Embrace Infrastructure as Code principles to define and manage ML infrastructure. This allows both teams to collaborate on infrastructure changes and easily replicate environments.

- Shared Tools and Environments: Foster the use of shared tools and environments to streamline collaboration. This can include using containers for consistent development and deployment environments.

- Joint Testing and Validation: Collaborate on testing and validation processes. DevOps can assist in automating testing, while Data Science can provide valuable insights into model evaluation.

- Feedback Loop: Establish a feedback loop between Data Science and DevOps teams. Feedback from production environments can help inform model improvements, infrastructure changes, and overall system performance.

- Security and Compliance Collaboration: Collaborate on security and compliance aspects, ensuring that models and data are handled securely and in accordance with regulatory requirements.

- Post-Deployment Monitoring: Jointly monitor the performance of ML models in production. Regular meetings to discuss monitoring results and address issues can lead to prompt actions for improvement.

- Shared Responsibility: Promote a culture of shared responsibility between both teams. This encourages ownership of the ML system as a whole and reduces silos between Data Science and DevOps.

By fostering a collaborative environment between Data Science and DevOps teams, organizations can streamline the ML Ops process, improve model deployment, and deliver more reliable and efficient machine learning solutions to end-users.

Cloud-Native ML Ops

Cloud-native ML Ops refers to the practice of deploying and managing machine learning (ML) applications using cloud-native technologies and principles. It combines ML Ops practices with cloud computing capabilities to enable efficient, scalable, and flexible ML workflows. Cloud-native ML Ops leverages the benefits of the cloud, such as on-demand resources, auto-scaling, and managed services, to streamline the development, deployment, and monitoring of ML models. Here are some key aspects of cloud-native ML Ops.

- Containerization: Cloud-native ML Ops often involves packaging ML models and their dependencies into containers (e.g., Docker) to ensure consistency and portability across different environments.

- Kubernetes Orchestration: Kubernetes, an open-source container orchestration platform, is commonly used in cloud-native ML Ops to manage containerized ML workloads efficiently. Kubernetes enables automatic scaling, load balancing, and self-healing of ML applications.

- Infrastructure as Code (IaC): IaC principles are applied to define and manage ML infrastructure in a cloud-native ML Ops environment. Infrastructure configurations are codified, version-controlled, and automatically deployed using tools like Terraform.

- Managed Services: Cloud providers offer various managed services for ML, such as Amazon SageMaker, Google Cloud Vertex AI (the successor to AI Platform), or Azure Machine Learning. These services provide pre-configured ML environments along with automated model training and deployment, simplifying the ML Ops process.

- Serverless Computing: Serverless platforms, like AWS Lambda or Google Cloud Functions, can be utilized for executing ML inference tasks on-demand without the need to manage infrastructure explicitly.

- Auto-Scaling: Cloud-native ML Ops leverages auto-scaling capabilities to dynamically adjust the number of compute resources based on the workload, allowing efficient resource utilization.

- Monitoring and Logging: Cloud-native ML Ops takes advantage of cloud monitoring and logging services to gain insights into the performance and behavior of ML applications.

- Continuous Integration and Continuous Deployment (CI/CD): CI/CD pipelines are set up to automate the build, test, and deployment of ML models and infrastructure changes in the cloud environment.

- Data Management and Storage: Cloud-native ML Ops leverages cloud storage solutions (e.g., AWS S3, Google Cloud Storage) for efficient data storage and versioning.

- Cost Optimization: Cloud-native ML Ops allows organizations to optimize costs by using pay-as-you-go pricing models and automatically scaling resources as needed.

- Security and Compliance: Cloud providers offer security features such as identity and access management, encryption at rest and in transit, and network isolation, and cloud-native ML Ops builds on them with security best practices to protect data and models.

- Flexibility and Agility: Cloud-native ML Ops provides the flexibility to experiment with different ML tools and technologies, making it easier to adapt to changing requirements and stay up-to-date with the latest advancements.
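To make the auto-scaling point concrete: the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The Python function below is a minimal sketch of that rule applied to a hypothetical pool of model-serving pods. It is not the controller's actual code; the function name, replica bounds, and metric values are assumptions, and the 10% tolerance mirrors the HPA's documented default, which stops small fluctuations from triggering constant rescaling.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Replica count following the HPA scaling rule (illustrative sketch).

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: leave it alone
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Four inference pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90.0, 60.0))
```

Here four pods at 90% CPU against a 60% target scale out to six; at 62% the ratio falls inside the tolerance band and the replica count stays the same.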
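The serverless bullet can be sketched as a single function. AWS Lambda invokes a Python handler with an `event` and a `context` argument; the example below assumes an API Gateway proxy-style event whose `body` is a JSON string, and it uses a hypothetical two-feature logistic model with made-up weights in place of a real trained artifact. Defining the model at module level reflects the common pattern of doing expensive setup once per cold start rather than on every invocation.

```python
import json
import math

# Hypothetical model: weights that would normally be loaded from a bundled
# file or object storage. Module-level code runs once per cold start.
WEIGHTS = {"amount": 0.004, "num_items": -0.3}
BIAS = -1.5


def predict(features: dict) -> float:
    """Return a probability from the hypothetical logistic model."""
    z = BIAS + sum(w * float(features[name]) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def lambda_handler(event, context):
    """Handler in the (event, context) shape Lambda uses for Python.

    Assumes an API Gateway proxy-style event whose "body" is a JSON string.
    """
    try:
        features = json.loads(event["body"])
        score = predict(features)
    except (KeyError, TypeError, ValueError, OverflowError) as exc:
        # Malformed or incomplete input: report a client error instead of crashing.
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
    return {"statusCode": 200, "body": json.dumps({"score": round(score, 4)})}


# Local smoke test: call the handler directly, as the platform would.
print(lambda_handler({"body": json.dumps({"amount": 250, "num_items": 2})}, None))
```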

By adopting cloud-native ML Ops practices, organizations can accelerate their ML development cycles, improve resource utilization, and ensure that their ML applications are scalable, reliable, and cost-effective.

Challenges in Implementing ML Ops

Implementing ML Ops comes with its own set of challenges, as it involves integrating data science, software engineering, and operations practices to ensure efficient and reliable machine learning workflows. Some common challenges in implementing ML Ops include:

- Cultural Shift: ML Ops requires a cultural shift within the organization, as it involves breaking down silos between data science and operations teams and fostering a collaborative mindset.

- Data Quality and Governance: Ensuring data quality and maintaining data governance throughout the ML lifecycle can be challenging. Data inconsistencies or inaccuracies can lead to biased or unreliable models.

- Data Schema Changes: Changes to the data schema can occur due to updates in the data sources or data preprocessing pipelines. These changes can cause compatibility issues with existing ML models and result in errors during model deployment or inference. Handling data schema changes effectively requires robust data validation and versioning mechanisms.

- Version Control and Reproducibility: Managing version control of ML models, datasets, and configurations to ensure reproducibility of results can be complex, especially when dealing with large and evolving datasets.

- Infrastructure Management: Setting up and managing ML infrastructure, including hardware, software, and dependencies, can be challenging, especially for large-scale ML projects.

- Dependency Management: Managing dependencies, especially in complex ML pipelines with numerous libraries and frameworks, can be difficult to maintain and reproduce consistently.

- Model Drift: Model drift is the decline in a model's predictive performance after deployment. It is typically caused by data drift, where the distribution of production inputs moves away from the data the model was trained on, or by concept drift, where the relationship between the inputs and the target changes. Detecting and mitigating model drift requires ongoing monitoring and regular model retraining.

- Model Deployment and Monitoring: Monitoring deployed models in real time is demanding, since issues such as data drift and concept drift have to be detected as they happen. Deployment itself involves integrating with existing systems, managing API endpoints, and ensuring the model can handle production-level traffic and meet security requirements. Automation and standardized deployment practices can help overcome these challenges.

- Scalability and Resource Management: Scaling ML models and infrastructure to handle increased workloads and optimizing resource utilization can be complex, particularly when dealing with large-scale models and datasets.

- Continuous Integration and Deployment: Integrating ML workflows into continuous integration and continuous deployment (CI/CD) pipelines introduces challenges in automating model testing and deployment.

- Model Explainability: Interpreting and explaining the decisions made by ML models, especially complex ones like deep learning models, can be challenging. Ensuring model explainability is crucial, especially in applications where transparency and accountability are required. Deploying interpretable models or using techniques like feature importance analysis can help address this hurdle.

- Model Reproducibility: Reproducing the exact results of a trained model can be difficult, especially when dealing with complex ML pipelines, random initialization, or hardware-specific optimizations. Implementing reproducibility techniques like setting random seeds and using containerization can assist in ensuring model reproducibility.

- Monitoring and Model Updates: Monitoring the performance of deployed ML models in production is essential to detect issues early and trigger model updates when necessary. Continuous integration and deployment pipelines can help automate the process of updating models in production environments.

- Security and Privacy Concerns: Ensuring the security and privacy of sensitive data used in ML models and protecting the models themselves from unauthorized access can be challenging.

- Model Governance and Compliance: Implementing model governance to ensure models are audited, validated, and comply with relevant regulations can be complex, particularly for organizations with multiple ML projects.

- Skillset and Expertise: Finding individuals with expertise in both data science and software engineering, and who understand ML Ops practices, can be challenging due to the specialized nature of the field.

- Legacy Systems Integration: Integrating ML Ops practices into existing legacy systems can be challenging, requiring careful planning and coordination.

- Collaboration and Communication: Effective collaboration and communication between data science, DevOps, and other teams involved in the ML Ops process are critical for success. Miscommunication or lack of coordination can lead to inefficiencies and delays in the development and deployment of ML models.
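The data-schema bullet above calls for validation at the boundary between the data pipeline and the model. A minimal pure-Python sketch follows, assuming a hypothetical four-column schema; production pipelines would more likely use a dedicated validation library, but the idea is the same: flag inputs that no longer match what the model was trained on before they reach inference.

```python
from typing import Any

# Hypothetical schema the model was trained on: column name -> expected Python type.
EXPECTED_SCHEMA: dict[str, type] = {
    "user_id": int,
    "age": int,
    "income": float,
    "country": str,
}


def validate_record(record: dict[str, Any], schema: dict[str, type]) -> list[str]:
    """Return a list of schema violations for one input record (empty if valid)."""
    errors = []
    for column, expected_type in schema.items():
        if column not in record:
            errors.append(f"missing column: {column}")
        elif not isinstance(record[column], expected_type):
            actual = type(record[column]).__name__
            errors.append(f"{column}: expected {expected_type.__name__}, got {actual}")
    # Columns the model has never seen often signal an upstream rename.
    for column in sorted(record.keys() - schema.keys()):
        errors.append(f"unexpected column: {column}")
    return errors


# An upstream change renamed "income" and started sending "age" as a string.
incoming = {"user_id": 17, "age": "34", "annual_income": 52000.0, "country": "DE"}
for problem in validate_record(incoming, EXPECTED_SCHEMA):
    print(problem)
```

Whether a failing record is rejected, coerced, or quarantined is a policy decision; versioning the schema alongside the model makes such changes explicit.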
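Detecting the drift described in the list above usually starts by comparing a feature's production distribution against its training distribution. The sketch below uses the Population Stability Index (PSI), one common choice among several (a two-sample Kolmogorov-Smirnov test is another). The equal-width binning, the small floor for empty bins, and the synthetic Gaussian data are assumptions made for illustration. A PSI below about 0.1 is often read as stable and above about 0.25 as significant shift, but these are rules of thumb rather than standards.

```python
import math
import random
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a training sample and a production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the training range; out-of-range values go to the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps empty bins from causing log(0) or division by zero.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


random.seed(0)
train = [random.gauss(0, 1) for _ in range(5000)]
stable = [random.gauss(0, 1) for _ in range(5000)]
shifted = [random.gauss(1, 1) for _ in range(5000)]  # mean moved by one standard deviation
print(f"stable: {psi(train, stable):.3f}  shifted: {psi(train, shifted):.3f}")
```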
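The reproducibility bullets mention setting random seeds. The toy example below shows the effect with a hypothetical SGD fit whose result depends on random initialization and data shuffling: the same seed yields bit-identical parameters, a different seed does not. It uses a dedicated `random.Random` instance instead of global state; in a real pipeline every library with its own generator (for example NumPy via `np.random.seed` or PyTorch via `torch.manual_seed`) would need seeding too, and some GPU kernels can remain nondeterministic even then.

```python
import hashlib
import json
import random


def train_model(data: list[tuple[float, float]], seed: int, epochs: int = 50) -> dict[str, float]:
    """Toy SGD fit of y = w*x + b; random init and shuffling make it seed-dependent."""
    rng = random.Random(seed)  # dedicated RNG instead of hidden global state
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    lr = 0.01
    for _ in range(epochs):
        order = list(range(len(data)))
        rng.shuffle(order)
        for i in order:
            x, y = data[i]
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return {"w": w, "b": b}


def fingerprint(params: dict[str, float]) -> str:
    """Short, stable hash of the learned parameters for comparing runs."""
    blob = json.dumps(params, sort_keys=True).encode()
    return hashlib.sha256(blob).hexdigest()[:12]


data = [(x / 10, 2.0 * x / 10 + 1.0) for x in range(20)]
run_a = train_model(data, seed=42)
run_b = train_model(data, seed=42)
run_c = train_model(data, seed=7)
print(fingerprint(run_a), fingerprint(run_b), fingerprint(run_c))
```

Recording the seed and the parameter fingerprint with each training run makes it cheap to check later whether a retrained model really matches an earlier one.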
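For the monitoring-and-updates bullet, a retraining trigger can be as simple as a rolling accuracy check over recently labeled predictions. The class below is a hypothetical sketch; the window size and the 0.9 threshold are illustrative. In practice ground-truth labels often arrive with a delay, and the trigger would start a CI/CD retraining pipeline rather than print a flag.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent labeled predictions.

    Signals that retraining is needed when rolling accuracy drops below
    `threshold`, but only once the window is full, so a handful of early
    errors does not trigger a retrain.
    """

    def __init__(self, window: int = 100, threshold: float = 0.9) -> None:
        self.outcomes: deque = deque(maxlen=window)  # True = prediction matched label
        self.threshold = threshold

    def record(self, prediction, label) -> None:
        self.outcomes.append(prediction == label)

    @property
    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def needs_retraining(self) -> bool:
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy < self.threshold


monitor = AccuracyMonitor(window=50, threshold=0.9)
for _ in range(50):
    monitor.record(prediction=1, label=1)  # healthy period
print(monitor.needs_retraining())  # False
for _ in range(10):
    monitor.record(prediction=1, label=0)  # labels start to disagree
print(f"{monitor.accuracy:.2f}", monitor.needs_retraining())  # 0.80 True
```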
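The explainability bullet mentions feature importance analysis. One model-agnostic technique is permutation importance: shuffle one feature column at a time and measure how much the model's accuracy drops. The pure-Python version below is an illustrative sketch with a hypothetical threshold "model" and synthetic data; scikit-learn provides a full implementation as `sklearn.inspection.permutation_importance`.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]
Model = Callable[[Row], int]


def accuracy(model: Model, X: Sequence[Row], y: Sequence[int]) -> float:
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: Sequence[Row], y: Sequence[int],
                           n_repeats: int = 5, seed: int = 0) -> list[float]:
    """Mean drop in accuracy when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def threshold_model(row: Row) -> int:
    """Hypothetical trained model that, unknown to its users, only uses feature 0."""
    return int(row[0] > 0.5)


data_rng = random.Random(1)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [threshold_model(row) for row in X]
print(permutation_importance(threshold_model, X, y))
```

Feature 1 gets an importance of zero because shuffling it never changes a prediction, which is exactly the kind of insight that helps explain what a model actually relies on.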

Despite these challenges, implementing ML Ops can bring significant benefits, including improved collaboration, faster development cycles, reliable models, and streamlined deployment processes. Addressing these challenges requires a well-defined strategy, investment in tools and technologies, and a commitment to fostering a culture of collaboration and continuous improvement within the organization.

ML Ops Tools and Frameworks

ML Ops tools and frameworks are essential for streamlining the machine learning development and deployment process, enabling collaboration, automation, and scalability. Different tools cater to different aspects of ML Ops; some of the most widely used are:

- Kubeflow: Kubeflow is an open-source platform built on Kubernetes, designed to streamline the deployment and management of machine learning workflows. It provides tools for training, serving, and scaling ML models, along with Kubeflow Pipelines for orchestrating steps such as data preprocessing and tracking pipeline runs and their artifacts.

- MLflow: MLflow is an open-source platform for managing the ML lifecycle. It offers tools for tracking experiments, packaging and sharing models, and managing deployment environments. MLflow supports various ML frameworks like TensorFlow, PyTorch, and scikit-learn.

- TensorFlow Extended (TFX): TFX is an end-to-end platform from TensorFlow for deploying production ML pipelines. It includes components for data preprocessing, model training, evaluation, and deployment, all integrated with TensorFlow’s ecosystem.

- Amazon SageMaker: SageMaker is a fully managed service from AWS that provides an end-to-end platform for building, training, and deploying ML models at scale. It includes pre-built Jupyter notebooks, optimized ML algorithms, and automated model tuning.

- Azure Machine Learning: Azure Machine Learning is a cloud-based service from Microsoft that provides a collaborative environment for building, training, and deploying ML models. It integrates with popular ML frameworks and offers automated machine learning capabilities.

- Google Cloud Vertex AI: Vertex AI is a fully managed service that allows users to build, train, and deploy ML models on Google Cloud. It offers features like hyperparameter tuning, automated machine learning, and scalable model serving.

- DVC (Data Version Control): DVC is an open-source version control system specifically designed for ML projects. It enables versioning and sharing of ML models, datasets, and configurations, facilitating collaboration and reproducibility.

- Docker and Kubernetes: Docker is a popular containerization platform, while Kubernetes is a container orchestration platform. Together, they form a powerful combination for managing scalable and reproducible ML environments.

- Airflow: Apache Airflow is an open-source workflow automation and scheduling platform. It can be used to define, schedule, and monitor ML workflows, automating tasks such as data preprocessing, model training, and deployment.

- Weights & Biases: Weights & Biases is a platform for experiment tracking, model visualization, and collaboration. It helps data scientists keep track of their experiments, compare results, and share insights with their teams.

- GitLab CI/CD or Jenkins: CI/CD tools like GitLab CI/CD and Jenkins can be used to set up automated pipelines for model training, testing, and deployment, ensuring continuous integration and continuous deployment of ML models.
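The idea behind data versioning tools such as DVC is content addressing: DVC records an MD5 hash of tracked data in small metadata files that live in Git, so any change to the data produces a new hash. The sketch below illustrates only that idea with the standard library; it is not DVC's implementation or CLI, and the function names and sample files are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """MD5 of a file's contents, read in chunks so large files need not fit in memory."""
    digest = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(data_dir: Path) -> dict[str, str]:
    """Map every file under data_dir (as a relative path) to its content hash."""
    return {
        p.relative_to(data_dir).as_posix(): file_digest(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def changed_files(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """Files added, removed, or modified between two snapshots."""
    return sorted(k for k in old.keys() | new.keys() if old.get(k) != new.get(k))


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train.csv").write_text("x,y\n1,2\n")
    v1 = snapshot(root)
    (root / "train.csv").write_text("x,y\n1,2\n3,4\n")  # dataset updated in place
    (root / "labels.csv").write_text("id,label\n1,0\n")  # new file added
    v2 = snapshot(root)

print(changed_files(v1, v2))
```

Committing a snapshot like `v1` next to the training code ties each model version to the exact data it was trained on.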
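GitLab CI/CD and Jenkins run whatever scripts a pipeline stage defines and treat a non-zero exit status as a failed step. In ML pipelines this is often used for a promotion gate: a script compares a candidate model's evaluation metrics against the production model and blocks deployment if it regresses. The sketch below assumes hypothetical metric names, thresholds, and hard-coded numbers; a real pipeline would read the metrics from an earlier evaluation stage.

```python
def promotion_gate(candidate: dict[str, float], production: dict[str, float],
                   max_accuracy_drop: float = 0.01,
                   max_p95_latency_ms: float = 50.0) -> list[str]:
    """Return reasons to block a deployment; an empty list means the candidate may ship."""
    failures = []
    if candidate["accuracy"] < production["accuracy"] - max_accuracy_drop:
        failures.append(
            f"accuracy {candidate['accuracy']:.3f} is more than {max_accuracy_drop} "
            f"below production ({production['accuracy']:.3f})"
        )
    if candidate["p95_latency_ms"] > max_p95_latency_ms:
        failures.append(
            f"p95 latency {candidate['p95_latency_ms']:.1f} ms exceeds "
            f"the {max_p95_latency_ms} ms budget"
        )
    return failures


# Hypothetical metrics; a real pipeline would load these from the evaluation stage's output.
candidate = {"accuracy": 0.912, "p95_latency_ms": 38.0}
production = {"accuracy": 0.905, "p95_latency_ms": 41.0}

problems = promotion_gate(candidate, production)
if problems:
    # A non-zero exit status fails the CI job and stops the deploy stage.
    raise SystemExit("deployment blocked: " + "; ".join(problems))
print("candidate passed the promotion gate")
```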

These are just a few examples of the many ML Ops tools and frameworks available. The choice of tools and frameworks depends on the specific requirements and preferences of the organization and the ML projects being undertaken. Successful ML Ops implementation often involves a combination of multiple tools and platforms to address the diverse needs of the ML development lifecycle.

Skills required for ML Ops

ML Ops, short for Machine Learning Operations, requires a combination of technical, operational, and communication skills to effectively manage the deployment and maintenance of machine learning models in production environments. Below are some essential skills needed for ML Ops:

- Data Science and Machine Learning Knowledge: Understanding machine learning concepts, algorithms, and model development is essential to collaborate effectively with data scientists. ML Ops professionals should grasp the fundamentals of supervised and unsupervised learning, model evaluation, feature engineering, and more.

- Programming Proficiency: Strong programming skills are necessary to work with data, build pipelines, and integrate models into production systems. Python is the most widely used language in ML Ops, but knowledge of other languages like R or Scala can be beneficial.

- Software Development and Version Control: ML Ops professionals must be familiar with software development principles, version control systems (e.g., Git), and best practices for maintaining code repositories. This allows them to manage model versions, track changes, and collaborate efficiently with data scientists and engineers.

- Containerization: Knowledge of containerization tools like Docker is crucial in ML Ops. Containerization helps package machine learning models along with their dependencies, ensuring consistent deployment across different environments.

- Cloud Platforms and Infrastructure: Proficiency in cloud platforms like AWS, GCP, or Azure is valuable for leveraging cloud-based services and infrastructure. Cloud services facilitate scalability, resource management, and cost-effective deployment of ML models.

- Continuous Integration/Continuous Deployment (CI/CD): Understanding CI/CD practices and tools is vital for automating the deployment of machine learning models. This enables rapid iteration and seamless updates to deployed models.

- Model Monitoring and Logging: Monitoring model performance in production is critical to ensure models remain accurate and reliable over time. Skills in setting up monitoring systems and logging mechanisms are crucial for detecting anomalies and triggering model updates.

- Automation and Orchestration: ML Ops professionals should be proficient in using automation tools and workflow orchestrators (e.g., Airflow) to streamline repetitive tasks, manage data pipelines, and automate model deployments.

- Scalability and Resource Management: Understanding resource utilization and scaling strategies is essential to optimize ML model performance and cost-effectively manage computational resources.

- Security and Privacy Awareness: Security is paramount when working with sensitive data and machine learning models. ML Ops professionals should be knowledgeable about securing data, models, and infrastructure to protect against potential threats.

- Communication and Collaboration: Strong communication skills are vital in ML Ops to effectively collaborate with cross-functional teams, including data scientists, software engineers, and DevOps professionals. The ability to convey technical concepts to non-technical stakeholders is crucial for successful project execution.

- Problem-Solving and Troubleshooting: ML Ops professionals should be adept at identifying and resolving issues that may arise during model deployment or maintenance. Troubleshooting skills are essential for ensuring models run smoothly in production.
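As an example of the monitoring and logging skills listed above, structured prediction logs (one JSON object per event) are far easier for log aggregation and monitoring services to query than free-form text. The sketch below uses only Python's standard `logging` module; the logger name, the `fields` convention for passing structured data through `extra`, and the model version string are assumptions of this example.

```python
import json
import logging
import time
import uuid


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON line, which log aggregators parse easily."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured fields arrive via extra={"fields": {...}} (a convention of this sketch).
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


logger = logging.getLogger("model.predictions")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)


def log_prediction(model_version: str, features: dict, prediction: float,
                   latency_ms: float) -> None:
    """Emit one structured event per prediction for later monitoring and debugging."""
    logger.info("prediction", extra={"fields": {
        "request_id": str(uuid.uuid4()),
        "model_version": model_version,
        "features": features,
        "prediction": prediction,
        "latency_ms": round(latency_ms, 2),
    }})


start = time.perf_counter()
score = 0.73  # stand-in for a real model call
log_prediction("fraud-model-v3", {"amount": 250, "num_items": 2}, score,
               (time.perf_counter() - start) * 1000)
```

Logging the model version with every prediction is what later makes it possible to attribute a drop in accuracy or a latency spike to a specific deployment.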

Combining these skills enables ML Ops professionals to bridge the gap between data science and production, ensuring that machine learning models are deployed and managed effectively in real-world applications.

Conclusion

ML Ops is a critical discipline that bridges the gap between data science experimentation and production deployment of machine learning models. By streamlining the entire model lifecycle, ML Ops ensures that machine learning applications remain reliable, scalable, and maintainable. As the demand for machine learning solutions continues to grow, ML Ops will play an increasingly significant role in enabling data-driven applications and shaping the future of AI.