What is Deep learning?

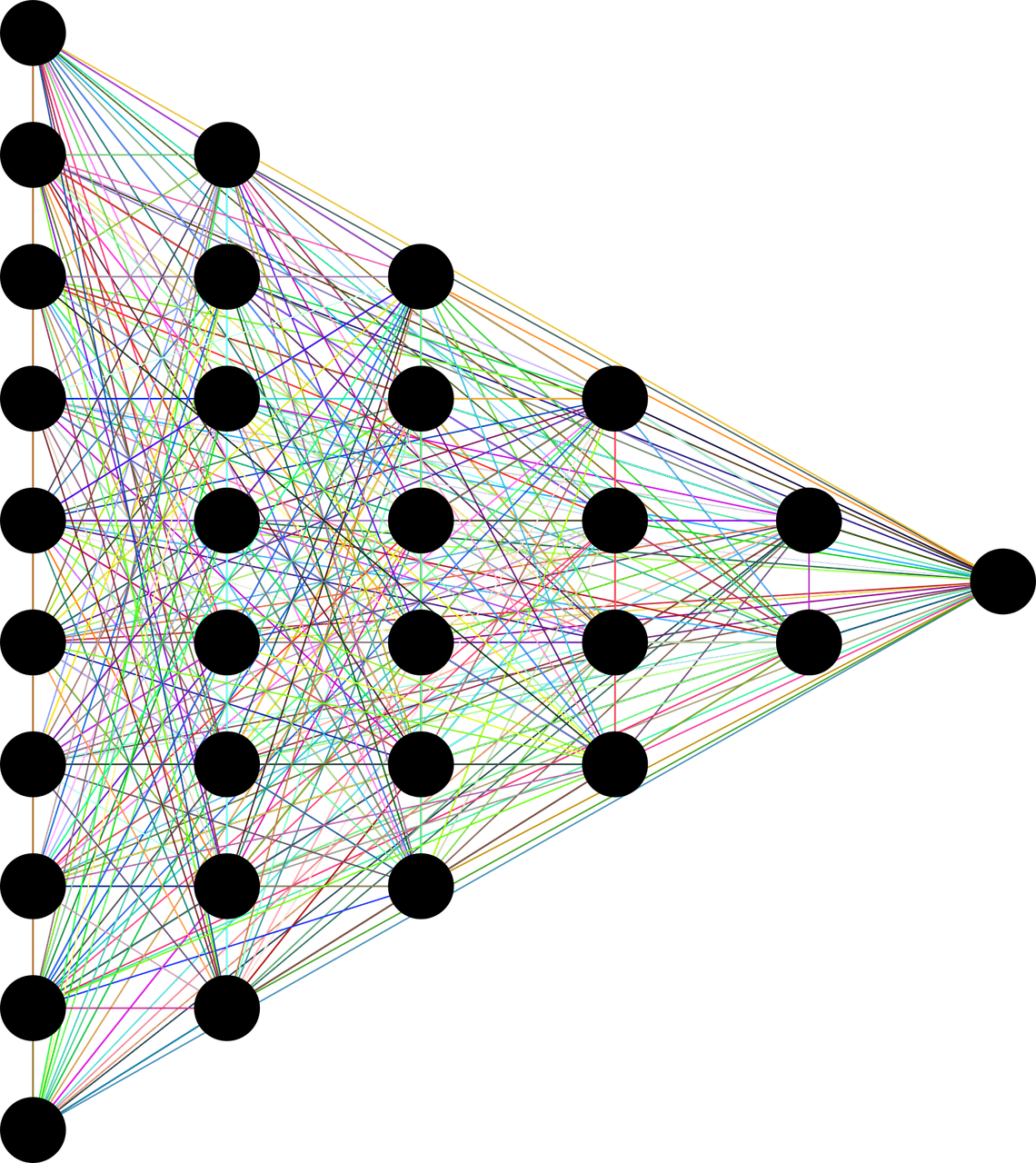

Deep learning is a subfield of machine learning and artificial intelligence that loosely imitates the way the human brain acquires certain kinds of knowledge. It is a powerful set of techniques for learning in neural networks. The ‘deep’ in deep learning refers to the number of layers in a neural network. A deep learning model is built from a neural network made up of artificial neurons.

A neural network is divided into three major kinds of layers: the input layer (the first layer, where the network receives its input), the hidden layers (the layers in between, which transform the input step by step; adding hidden layers lets the model learn more complex patterns, although more layers alone do not guarantee a more accurate output), and the output layer (the last layer, where we get the output).

How Deep learning works

A deep neural network is also referred to as a deep learning model because it is built on a neural network architecture. A basic neural network consists of only three layers (the input layer, one hidden layer, and the output layer), but a deep network can have as many as 150 hidden layers. Extra hidden layers let the model represent more complex functions, which often improves accuracy when there is enough training data, but more layers do not automatically mean a more accurate output.
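To make the idea of depth concrete, here is a minimal sketch of data flowing through a stack of layers. The layer sizes, weights, and the choice of a sigmoid activation are all hypothetical values picked for illustration; a real model would learn its weights during training.

```python
import math

def sigmoid(x):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """One fully connected layer: weighted sum plus bias per neuron, then sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the data through every layer in order and return the last activations."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden -> 3 hidden -> 1 output.
# Each entry is (weights, biases); weights has one row per neuron.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.2, -0.5, 0.3], [0.7, 0.1, -0.4], [-0.6, 0.2, 0.5]], [0.0, 0.0, 0.0]),
    ([[0.3, -0.1, 0.6]], [0.05]),
]

output = forward([1.0, 0.5], layers)
print(output)  # a single value between 0 and 1
```

Making the network deeper only means adding more `(weights, biases)` entries to the `layers` list; the `forward` function stays the same.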

We use deep learning algorithms (such as radial basis function networks, multilayer perceptrons, etc.) to train our model, which eliminates the need to extract features manually. The input and output layers of a deep network are called visible layers. The input layer is where the deep learning model gets the data for processing, and the output layer is where the final prediction or classification is produced.

After the input layer receives the data, a deep learning algorithm (such as a convolutional neural network, radial basis function network, or multilayer perceptron) trains the model and learns the features itself instead of relying on manual feature extraction. The trained model then produces the output; this is how deep learning works. We also use the backpropagation algorithm to calculate how much each weight contributed to the prediction error, and an optimizer such as gradient descent uses those values to update the weights. (Other learning rules, such as Boltzmann learning and Hebbian learning, also exist, but they do not use backpropagation.)
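The training step can be sketched with a very small network: one sigmoid hidden neuron feeding one linear output neuron. The toy data, starting weights, learning rate, and squared-error loss below are assumptions made for illustration only. Backpropagation applies the chain rule from the output back toward the input, and gradient descent then nudges each parameter against its gradient.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical toy data: (input, target) pairs.
data = [(-2.0, 0.1), (-1.0, 0.2), (0.0, 0.5), (1.0, 0.8), (2.0, 0.9)]

# Tiny network: x -> sigmoid hidden neuron -> linear output neuron.
params = {"w1": 0.5, "b1": 0.0, "w2": 0.5, "b2": 0.0}

def predict(x, p):
    """Return the hidden activation and the network output for input x."""
    h = sigmoid(p["w1"] * x + p["b1"])
    return h, p["w2"] * h + p["b2"]

def total_loss(p):
    """Sum of squared errors (halved) over the whole data set."""
    return sum(0.5 * (predict(x, p)[1] - t) ** 2 for x, t in data)

def train_step(x, t, p, lr):
    h, y = predict(x, p)
    # Backpropagation: chain rule, starting at the output error.
    d_out = y - t                              # dLoss/dy
    grads = {"w2": d_out * h, "b2": d_out}
    d_hidden = d_out * p["w2"] * h * (1.0 - h)  # back through the sigmoid
    grads["w1"] = d_hidden * x
    grads["b1"] = d_hidden
    # Gradient descent: move each parameter against its gradient.
    for name in p:
        p[name] -= lr * grads[name]

loss_before = total_loss(params)
for _ in range(2000):
    for x, t in data:
        train_step(x, t, params, lr=0.1)
loss_after = total_loss(params)

print(loss_before, loss_after)  # the loss should shrink during training
```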

Some Algorithms used in Deep Learning

- Convolutional Neural Networks.

- Feed Forward Network.

- Feed Back Network.

- Radial Basis Function Networks.

- Multi-layer Perceptrons.

- Self Organizing Maps.

Convolutional Neural Network

A convolutional neural network (CNN) consists of multiple layers and is mainly used for image processing and object detection. An early CNN, LeNet, was developed by Yann LeCun and colleagues in the late 1980s. CNNs can recognize characters such as ZIP codes and digits, and they are also used to analyze satellite images and medical images, forecast time series, etc.
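The core operation of a CNN layer is sliding a small kernel over the image and taking a weighted sum at each position. Here is a minimal sketch, assuming stride 1 and no padding; the 4x4 image and the edge-detecting kernel are made-up examples. In a real CNN the kernel values are learned rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding).

    This is cross-correlation, which is what CNN layers usually compute
    under the name "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Hypothetical 4x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written kernel that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The middle column of the feature map lights up because that is where the kernel straddles the edge.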

Feed-forward Network

In a feed-forward network, the signal travels in only one direction: from the input layer, through the hidden layers, to the output layer. Because there are no loops, the network keeps no memory of earlier inputs, so it can be less accurate than feedback networks on tasks that depend on sequence or context. Feed-forward networks have fixed-size inputs and outputs. They are mostly used in pattern generation, pattern recognition, pattern classification, etc.

Feed-back Network

In a feedback network, signals travel in both directions through loops in the network, so it can give more accurate results than a feed-forward network on some tasks. However, the loops also make feedback networks more complicated: their state keeps changing until it reaches an equilibrium point, and it stays there until the input changes. Feedback neural network architectures are also referred to as interactive or recurrent networks. They are used in content-addressable memories.
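One well-known feedback network used as a content-addressable memory is the Hopfield network. The article does not name it, so treat the sketch below as one illustrative choice, with a made-up six-element pattern. Every neuron feeds back into every other neuron, and the update loop runs until a full sweep changes nothing, which in this small example means the state has settled.

```python
def train_hopfield(patterns):
    """Build the weight matrix with the Hebbian rule (no self-connections)."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j]
    return weights

def recall(weights, state, max_sweeps=10):
    """Update neurons one at a time until a full sweep changes nothing."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            # Each neuron receives feedback from all the other neurons.
            total = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if total >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break  # the state is stable
    return state

# Hypothetical stored pattern (values are +1 or -1).
stored = [1, -1, 1, -1, 1, -1]
weights = train_hopfield([stored])

# Present a corrupted copy: the third element is flipped.
noisy = [1, -1, -1, -1, 1, -1]
recovered = recall(weights, noisy)
print(recovered)  # [1, -1, 1, -1, 1, -1]
```

The network is addressed by content: given part of a pattern, it fills in the rest.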

Radial Basis Function Network

A radial basis function (RBF) network has three layers: input, hidden, and output. It has exactly one hidden layer.

The input layer acts as a set of source nodes that connect the network to its environment.

The hidden layer provides a set of basis-function units that map the input into a space that usually has a higher dimensionality.

The output layer computes a linear combination of the hidden-unit outputs, that is, a simple weighted sum with a linear output.

For function approximation (fitting real-valued targets), this linear output is sufficient. For pattern classification, a sigmoid or hard limiter can be applied to the output neurons.
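The three layers can be sketched for a function-approximation task. Everything specific here is an assumption for illustration: Gaussian basis functions, seven evenly spaced centers, a width of 1.0, a sine curve as the target, and a simple per-sample gradient update for the output weights. Only the linear output layer is trained; the hidden layer stays fixed.

```python
import math

def gaussian(x, center, width):
    """Radial basis function: largest when x is at the center."""
    return math.exp(-((x - center) ** 2) / (2.0 * width ** 2))

def hidden_layer(x, centers, width):
    """Map a 1-D input to one activation per basis function."""
    return [gaussian(x, c, width) for c in centers]

def rbf_output(x, centers, width, weights, bias):
    """Linear output layer: weighted sum of the hidden activations."""
    phi = hidden_layer(x, centers, width)
    return sum(w * p for w, p in zip(weights, phi)) + bias

def mean_squared_error(samples, centers, width, weights, bias):
    errors = [(rbf_output(x, centers, width, weights, bias) - t) ** 2
              for x, t in samples]
    return sum(errors) / len(errors)

# Hypothetical setup: approximate sin(x) on [0, 6.25].
centers = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
width = 1.0
samples = [(0.25 * i, math.sin(0.25 * i)) for i in range(26)]

weights = [0.0] * len(centers)
bias = 0.0
mse_before = mean_squared_error(samples, centers, width, weights, bias)

lr = 0.05
for _ in range(500):
    for x, t in samples:
        phi = hidden_layer(x, centers, width)
        error = sum(w * p for w, p in zip(weights, phi)) + bias - t
        # Only the output weights and bias are adjusted.
        weights = [w - lr * error * p for w, p in zip(weights, phi)]
        bias -= lr * error

mse_after = mean_squared_error(samples, centers, width, weights, bias)
print(mse_before, mse_after)  # the error should drop after training
```

For classification, the same network could pass `rbf_output` through a sigmoid or a threshold instead of using the raw weighted sum.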

Multi-layer perceptron

A multi-layer perceptron (MLP) is a feed-forward artificial neural network that generates a set of outputs from a set of inputs. It is characterized by several layers of nodes connected as a directed graph between the input and output layers.

An MLP is trained with backpropagation, a supervised learning technique, and it uses a nonlinear activation function on every node except the input nodes. The nonlinearity lets it solve problems that are not linearly separable, such as the XOR gate. Some applications that use MLPs are speech recognition, image recognition, machine translation, etc.
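The XOR case is small enough to write out by hand. In the sketch below the weights are picked manually rather than learned, and a step function stands in for the nonlinear activation to keep the arithmetic readable; a trained MLP would normally use a differentiable activation such as a sigmoid so that backpropagation can work. One hidden neuron behaves like OR, the other like AND, and the output neuron fires when OR is on but AND is off.

```python
def step(z):
    """Hard-threshold activation: 1 if the weighted sum is positive, else 0."""
    return 1 if z > 0 else 0

def xor_mlp(x1, x2):
    # Hidden layer (hand-picked weights, not learned):
    h_or = step(x1 + x2 - 0.5)    # fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)   # fires only if both inputs are 1
    # Output layer: "OR but not AND" is exactly XOR.
    return step(h_or - h_and - 0.5)

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_mlp(a, b))
# 0 0 0
# 0 1 1
# 1 0 1
# 1 1 0
```

No single neuron with these inputs can produce this table, because no straight line separates the two XOR classes; the hidden layer is what makes it possible.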

Self Organizing Maps

In 1982, T. Kohonen developed the self-organizing map (SOM) technique. A self-organizing system learns on its own, without supervision, by using unsupervised learning. A SOM maps multidimensional data into a lower-dimensional format, which makes complex problems easier to interpret. A SOM:

- Reduces the dimensions of data.

- Is trained by unsupervised learning.

- Groups similar data items together with the help of clustering.

- Maps multidimensional data onto a 2D map while preserving proximity relationships as well as possible.
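The properties listed above can be seen in a very small sketch. To keep it short, it maps 2-D points onto a 1-D chain of four nodes instead of the usual 2-D grid, and it starts from fixed weights instead of random ones. The data, learning-rate schedule, and neighborhood radius are all hypothetical choices. For each sample, the closest node (the best matching unit) and its neighbors on the chain are pulled toward the sample, with no labels involved.

```python
import math

def best_matching_unit(weights, sample):
    """Index of the node whose weight vector is closest to the sample."""
    distances = [sum((w_d - s_d) ** 2 for w_d, s_d in zip(w, sample))
                 for w in weights]
    return distances.index(min(distances))

def train_som(weights, data, epochs=50, start_lr=0.5, start_radius=1.5):
    weights = [list(w) for w in weights]
    for epoch in range(epochs):
        remaining = 1.0 - epoch / epochs
        lr = start_lr * remaining                       # learning rate decays
        radius = max(start_radius * remaining, 0.3)     # neighborhood shrinks
        for sample in data:
            bmu = best_matching_unit(weights, sample)
            for i, w in enumerate(weights):
                # Nodes near the BMU on the chain move more than distant ones.
                influence = math.exp(-((i - bmu) ** 2) / (2.0 * radius ** 2))
                for d in range(len(w)):
                    w[d] += lr * influence * (sample[d] - w[d])
    return weights

# Hypothetical unlabeled data: two loose groups of 2-D points.
data = [(0.1, 0.1), (0.1, 0.2), (0.2, 0.1),
        (0.9, 0.9), (0.8, 0.9), (0.9, 0.8)]

# Four nodes on a 1-D chain, starting close together (fixed for repeatability).
initial = [(0.4, 0.4), (0.45, 0.45), (0.55, 0.55), (0.6, 0.6)]

trained = train_som(initial, data)
node_a = best_matching_unit(trained, (0.1, 0.1))
node_b = best_matching_unit(trained, (0.9, 0.9))
print(node_a, node_b)  # points from the two groups land on different nodes
```

Each 2-D point is now summarized by a single node index, which is the dimensionality reduction, and nearby points share a node, which is the clustering.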